The use of Artificial Intelligence is reshaping every aspect of business, economy, and society by transforming experiences and relationships amongst stakeholders and citizens.

However, the use of AI also poses considerable risks and challenges, raising concerns about whether AI systems are worthy of human trust.

Due to the far-reaching socio-technical consequences of AI, organizations and government bodies have already started implementing frameworks and legislation to enforce trustworthy AI, such as

- the European Union’s “EU AI Act”, the first-ever legal framework on AI, which addresses the risks of AI and

- the U.S. Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, with directives to enhance safety, privacy, equity and competition.

AI Trust has also been identified by companies like Gartner as one of the top Strategic Technology trends.

Our Mission

Our goal is to make “trustworthy AI” synonymous with “AI”.

We do technically innovative research which helps produce human-centered trustworthy artificial intelligence.

Our lab was founded in response to a surge of both academic and industrial interest in the fairness, transparency, privacy, robustness and interpretability of AI systems. In our research we aim to strengthen the trust of humans in Artificial Intelligence. To establish trust, an AI application must be verifiably designed to function in a manner that mitigates bias, ensures transparency, security, data sovereignty and non-discrimination, and promotes societal and environmental well-being.

We share the perspective of the Mozilla Foundation that we need to move towards a world of AI that is helpful, rather than harmful, to human beings. This means that (i) human agency is a core part of how AI is built; and (ii) corporate accountability for AI is real and enforced.

Our aim is to help develop AI technology that is built and deployed in ways that support accountability and agency, and advance individual and collective well-being.

Our Approach

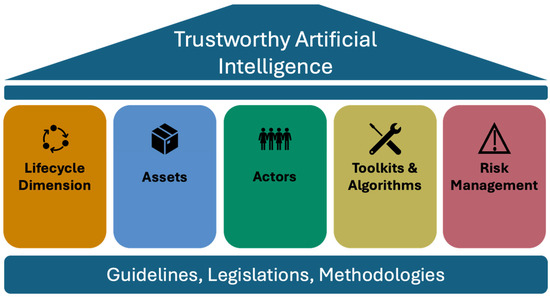

We focus on the issue of “trust” in AI systems and aim to design and develop methods, tools and best practices for the evaluation, design, and development of machine intelligence that provides guarantees for Trustworthy AI systems.

We develop solutions that support and advance trustworthy AI in areas like:

- smart manufacturing systems,

- formal and informal education,

- innovative healthcare,

- green mobility,

- circular industry and

- intelligent public administration.

We develop methods, tools and operational processes for the identification and mitigation of the negative impacts of AI applications and the causes of those impacts.

Our work aims to:

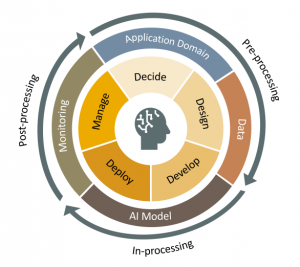

- consider all stages of the AI system lifecycle

- incorporate humans before, on, and over the loop

- take into account the application domain, which strongly affects the work

- account for all stakeholders who contribute to or are affected by the development.

In our work we take into account international approaches and standards like:

- The Assessment List for Trustworthy Artificial Intelligence (ALTAI), developed by the High-Level Expert Group on Artificial Intelligence set up by the European Commission, which helps assess whether an AI system complies with the seven requirements of Trustworthy AI;

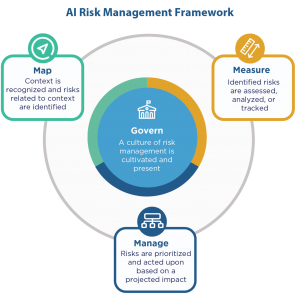

- The NIST AI Risk Management Framework (AI RMF) of the US National Institute of Standards and Technology, which is intended to improve the ability to incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products, services, and systems;

- ISO/IEC 42001:2023, an international standard that aims to ensure that AI systems are developed, deployed, and managed in a responsible, transparent, secure, and accountable manner; and

- ISO/IEC TR 24028:2020, a document on trustworthiness in artificial intelligence.

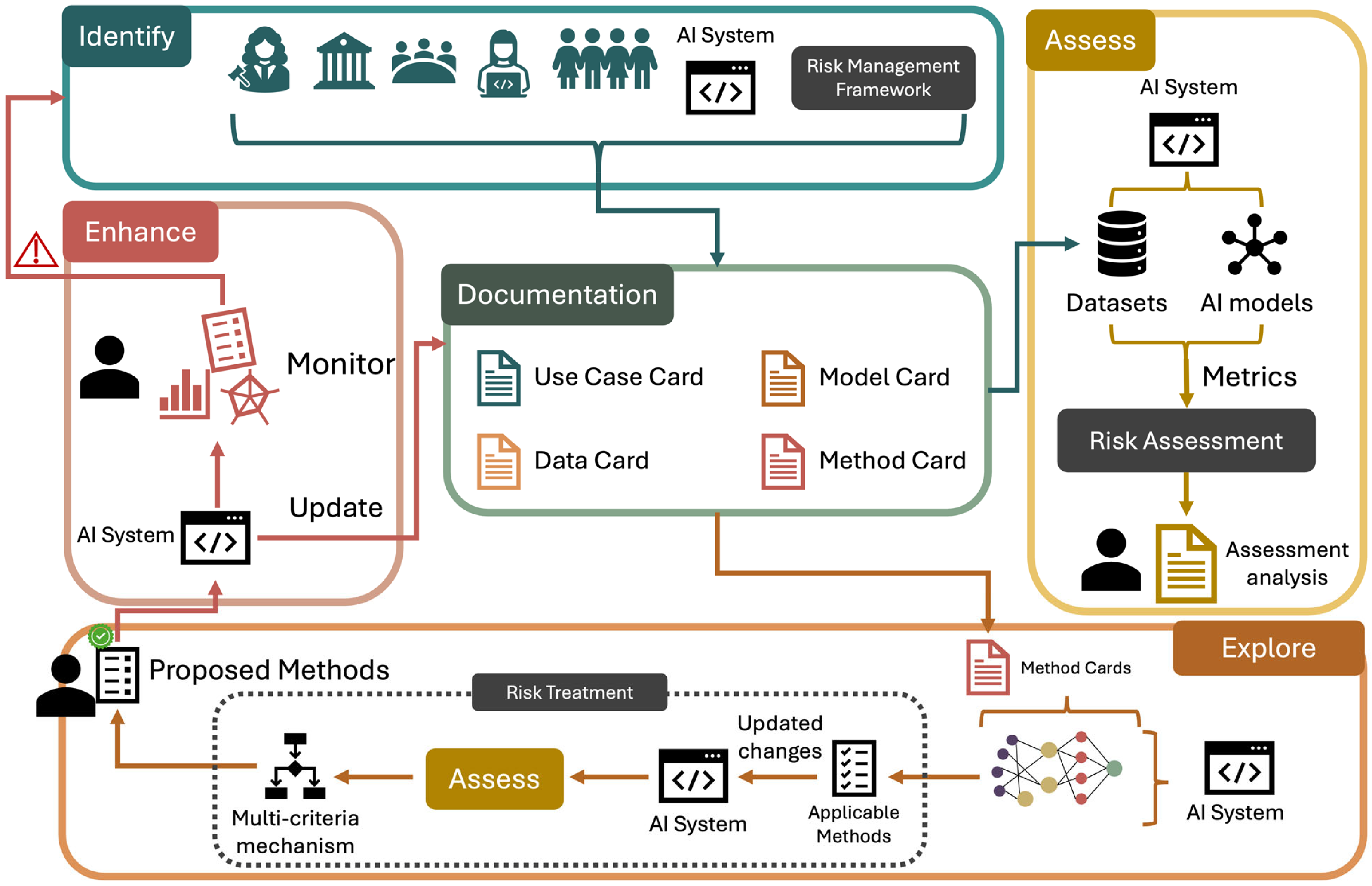

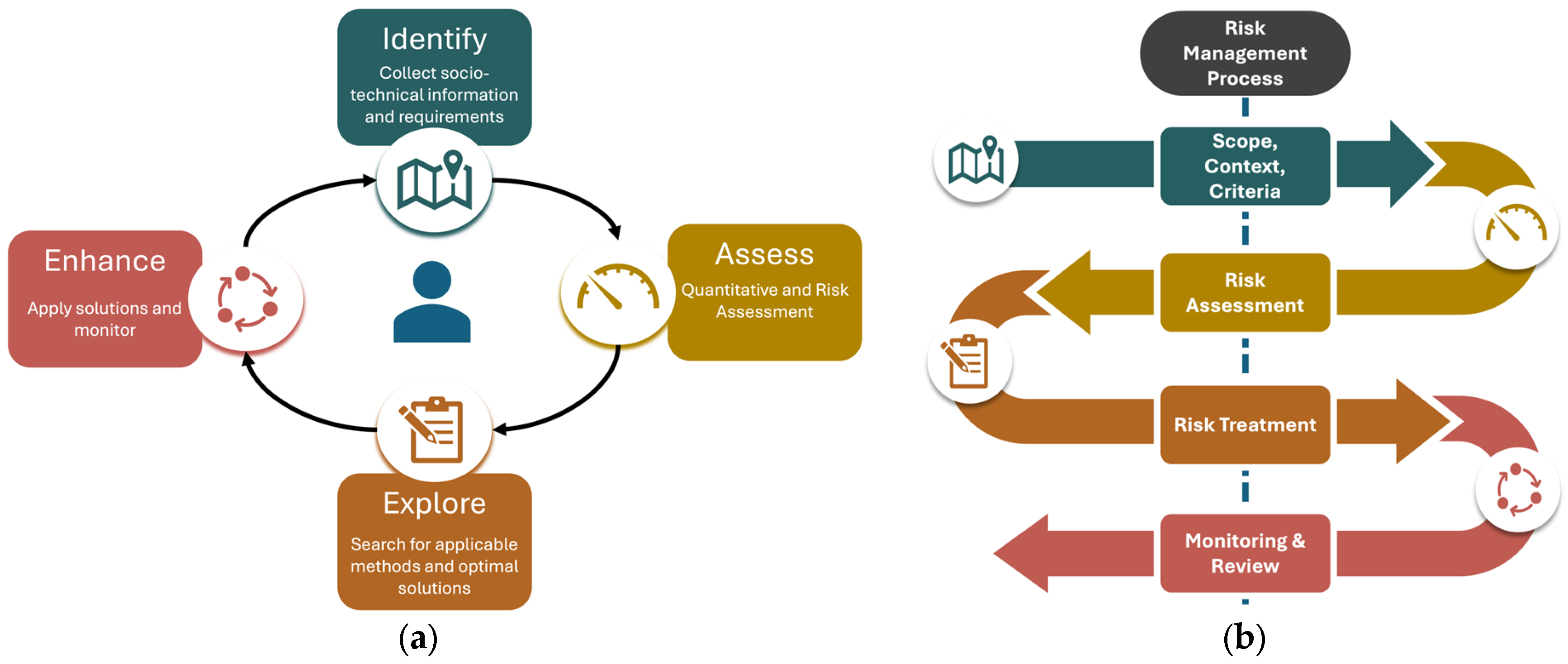

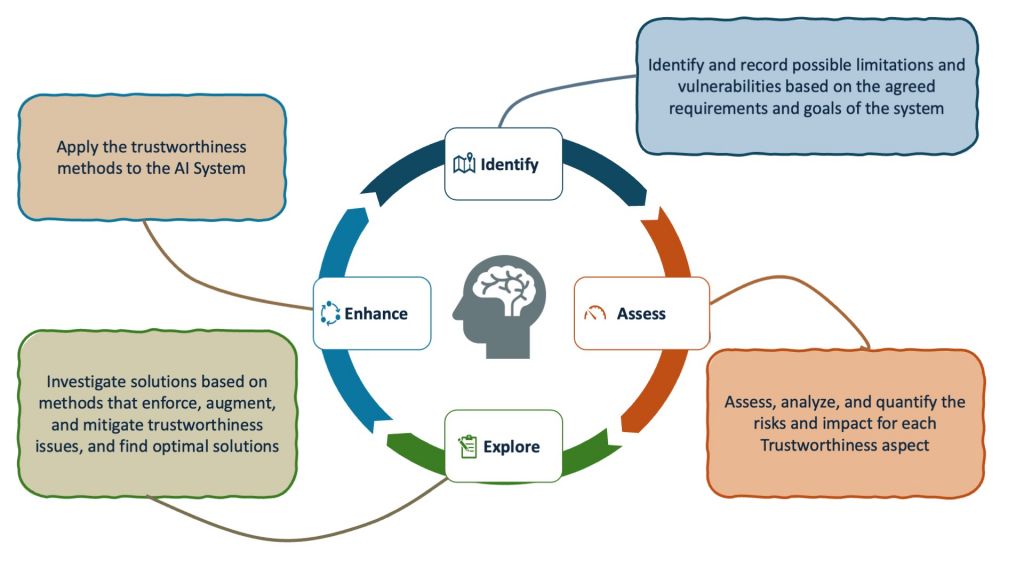

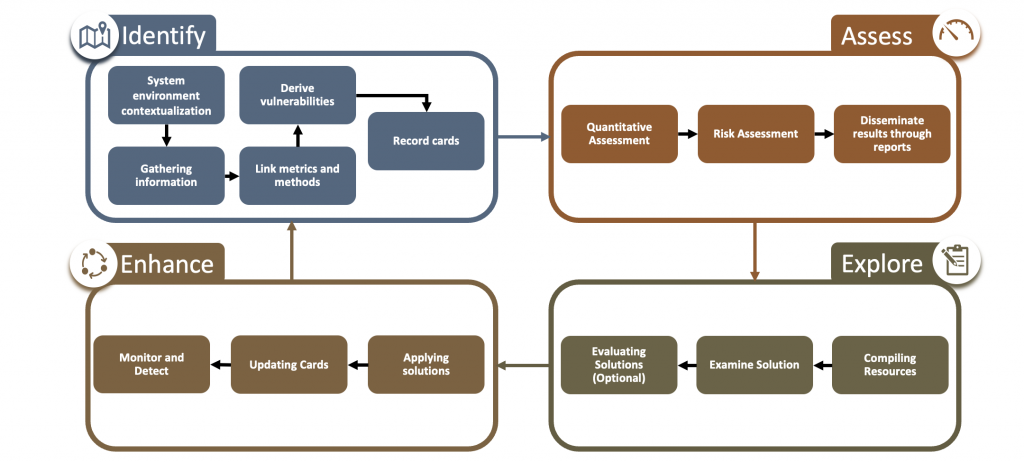

We have adopted a risk-based approach and work on all four phases of the AI Trustworthiness lifecycle:

Identify; Assess; Explore; and Enhance.

Identify: record possible limitations and vulnerabilities based on the agreed requirements and goals of the system.

Assess: analyze and quantify the risks and impact for each trustworthiness aspect.

Explore: look for solutions based on methods that enforce or augment trustworthiness and mitigate trustworthiness issues.

Enhance: based on the previous stages, search for optimal solutions that can be applied to the AI system to enhance its trustworthiness.
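The four phases can be illustrated with a small risk register. The following is only a minimal sketch and does not describe the lab's actual tooling: the class names, the 1 to 5 rating scales, the likelihood-times-impact scoring rule, and the example risks and mitigations are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Risk:
    """One recorded limitation or vulnerability (Identify phase)."""
    aspect: str        # trustworthiness aspect, e.g. "fairness"
    description: str
    likelihood: int    # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int        # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Assumed scoring rule: likelihood times impact.
        return self.likelihood * self.impact


@dataclass
class Mitigation:
    """A hypothetical candidate method for one trustworthiness aspect."""
    name: str
    aspect: str
    impact_reduction: int  # assumed: points removed from the impact rating


def assess(risks: List[Risk]) -> List[Risk]:
    """Assess phase: rank risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


def explore(risk: Risk, catalogue: List[Mitigation]) -> List[Mitigation]:
    """Explore phase: collect candidate methods for the risk's aspect."""
    return [m for m in catalogue if m.aspect == risk.aspect]


def enhance(risk: Risk, candidates: List[Mitigation]) -> Tuple[Optional[str], int]:
    """Enhance phase: pick the candidate that leaves the lowest residual score."""
    best_name, best_score = None, risk.score
    for m in candidates:
        residual = risk.likelihood * max(1, risk.impact - m.impact_reduction)
        if residual < best_score:
            best_name, best_score = m.name, residual
    return best_name, best_score


# Identify phase: hypothetical entries for an illustrative system.
risks = [
    Risk("robustness", "Accuracy drops on noisy inputs", 2, 3),
    Risk("fairness", "Approval rates differ across groups", 4, 4),
]
catalogue = [
    Mitigation("reweigh training data", "fairness", 2),
    Mitigation("post-process thresholds", "fairness", 1),
    Mitigation("augment with noisy samples", "robustness", 1),
]

for risk in assess(risks):
    name, residual = enhance(risk, explore(risk, catalogue))
    print(f"{risk.aspect}: score {risk.score} -> {residual} via {name}")
```

In practice the scoring rule and the catalogue of methods would depend on the application domain and on the stakeholders involved; the sketch only shows how the output of each phase feeds the next.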

Ongoing Projects

In THEMIS 5.0, the trustworthiness vulnerabilities of AI systems are determined using an AI-driven risk assessment approach, which effectively translates the directions given in the AI Act and relevant standards into technical implementations. THEMIS 5.0 will innovate in its consideration of the human perspective as well as the wider socio-technical systems’ perspective in the risk management-based trustworthiness evaluation. An innovative AI-driven conversational agent will productively engage humans in intelligent dialogues capable of driving the execution of continuous trustworthiness improvement cycles.

Check out the project’s web site: https://www.themis-trust.eu

FAITH aims to provide the practitioners and stakeholders of AI systems not only with a comprehensive analysis of the foundations of AI trustworthiness but also with an operational playbook for how to assess and build trustworthy AI systems and how to continuously measure their trustworthiness. FAITH adopts a human-centric trustworthiness assessment framework (FAITH AI_TAF), which enables the testing, measuring and optimization of risks associated with AI trustworthiness in critical domains. Seven (7) Large Scale Pilot activities in seven (7) critical and diverse domains (robotics, education, media, transportation, healthcare, active ageing, and industry) will validate the FAITH holistic estimation of trustworthiness.

Check out the project’s web site: https://faith-ec-project.eu

Publications on Trustworthy AI

Exploring the Landscape of Trustworthy AI: Status and Challenges

This paper explores the evolution towards trustworthy AI. We start with an examination of the characteristics inherent in trustworthy AI, along with the corresponding principles and standards associated with them. We then examine the methods and tools that are available to designers and developers in their quest to operationalize trusted AI systems. Finally, we outline research challenges towards end-to-end engineering of trustworthy AI by-design.

published in Intelligent Decision Technologies journal, vol. 18, no. 2, pp. 837-854, 2024.

A Card-based Agentic Framework for Supporting Trustworthy AI

In this paper we use and extend the “card-based” approach for documenting AI systems. We collect information through data cards, model cards and use case cards and also propose the use of “methods cards”, which structure technical information about available algorithms, methods and software toolkits. For the development of our software framework, we follow a neuro-symbolic agentic design pattern in which different roles involved in the TAI assessment process can be instantiated by enabling an LLM to be prompted and guided by navigating knowledge graphs, which have been derived from the recorded cards.

presented at the 4th TAILOR Conference – Trustworthy AI from lab to market, 4-5 June 2024 in Lisbon, Portugal

Trustworthy AI in education: A Framework for Ethical and Effective Implementation

This paper explores the importance of AI in education and identifies challenges to trustworthiness based on the ALTAI (Assessment List for Trustworthy Artificial Intelligence) framework. Drawing on this analysis, the paper proposes strategies for promoting trust in AI-driven education. By addressing these challenges and implementing the proposed strategies, educational institutions can build an environment of trust, transparency, and accountability in AI-driven education.

published in Proceedings of the 13th Hellenic Conference on Artificial Intelligence (pp. 1-7).

Assessing Trustworthy AI of Voice-enabled Intelligent Assistants for the Operator 5.0

In this paper, we present the application of the Assessment List for Trustworthy Artificial Intelligence (ALTAI), which was developed by the European Commission, to a Digital Intelligent Assistant (DIA) for manufacturing. We aim to contribute to the enrichment of ALTAI applications and to draw remarks regarding its applicability to diverse domains. We also discuss our responses to the ALTAI questionnaire, and present the score and the recommendations derived from the ALTAI web application.

published in the IFIP International Conference on Advances in Production Management Systems (pp. 220-234). Cham: Springer Nature Switzerland.

Trustworthiness Optimisation Process: A Methodology for Assessing and Enhancing Trust in AI Systems

In this paper, we formulate and propose the Trustworthiness Optimisation Process (TOP), which operationalises Trustworthy AI and brings together its procedural and technical approaches throughout the AI system lifecycle. It incorporates state-of-the-art enablers of trustworthiness such as documentation cards, risk management, and toolkits to find trustworthiness methods that increase the trustworthiness of a given AI system. To showcase the application of the proposed methodology, a case study is conducted, demonstrating how the fairness of an AI system can be increased.

published in the journal Electronics, Special Issue on “Future Development of Trustworthy Artificial Intelligence and Applications”

Memberships

As a member of the Big Data Value Association, we participate in etami.

etami stands for Ethical and Trustworthy Artificial and Machine Intelligence. By translating European and global principles for ethical AI into actionable and measurable guidelines, tools and methods, etami focusses on making AI a reliable and trustworthy technology. It does so by focussing on the social, legal and business risks associated with AI systems, rethinking AI lifecycle models to optimise quality in AI, and promoting auditing throughout the system’s life cycle. etami’s solutions are piloted across different domains, and coupled with proper software tooling.

The European Trustworthy AI Foundation is a non-profit organization empowering industry with state-of-the-art, open-source methodology and tools, enabling the engineering of AI-based systems that can be trusted and comply with regulations.

Its ambition is to propel Europe to the forefront of innovation in trustworthy AI by making its methodologies and tools an international benchmark, thus supporting the broader adoption of responsible AI in industry.

CERTAIN stands for “Center for European Research in Trusted AI” and focuses on an approach that highlights the issue of “trust” in AI systems – an aspect that is often neglected in international research.

CERTAIN is a consortium: a collaborative initiative involving various partners that is legally part of DFKI. It focuses on researching, developing, deploying, standardizing, and promoting Trusted AI techniques, with the aim of providing guarantees for and certification of AI systems. It also allows for project collaboration with both internal and external partners.